Closing: Jul 15, 2024

Published: Jun 27, 2024

Job Requirements

What we are looking for in you:

- Proven hands-on experience in software development using Python

- Proven hands-on experience in distributed systems, such as Kafka and Spark

- A Bachelor’s degree or equivalent in Computer Science, STEM, or a similar field

- Willingness to travel up to 4 times a year for internal events

Additional skills that you might also bring:

You might also bring some of the following experience, which can help the Data Platform team achieve its challenging goals and will determine the level we consider you for:

- Experience operating and managing other data platform technologies, whether SQL (MySQL, PostgreSQL, Oracle, etc.) or NoSQL (MongoDB, Redis, Elasticsearch, etc.), at a level similar to DBA expertise

- Experience with Linux systems administration, package management, and infrastructure operations

- Experience with the public cloud or a private cloud solution like OpenStack

- Experience operating Kubernetes clusters and a belief that Kubernetes can be used for serious persistent data services

Responsibilities

What your day will look like:

- The data platform team is responsible for the automation of data platform operations, with the mission of managing and integrating Big Data platforms at scale. This includes ensuring fault-tolerant replication, TLS, installation, backups and much more. The team also provides domain-specific expertise on the actual data systems to other teams within Canonical.

- This role is focused on the creation and automation of infrastructure features of data platforms, not on analysing or processing the data in them.

- Collaborate proactively with a distributed team

- Write high-quality, idiomatic Python code to create new features

- Debug issues and interact with upstream communities publicly

- Work with helpful and talented engineers including experts in many fields

- Discuss ideas and collaborate on finding good solutions

- Work from home with global travel for 2 to 4 weeks per year for internal and external events

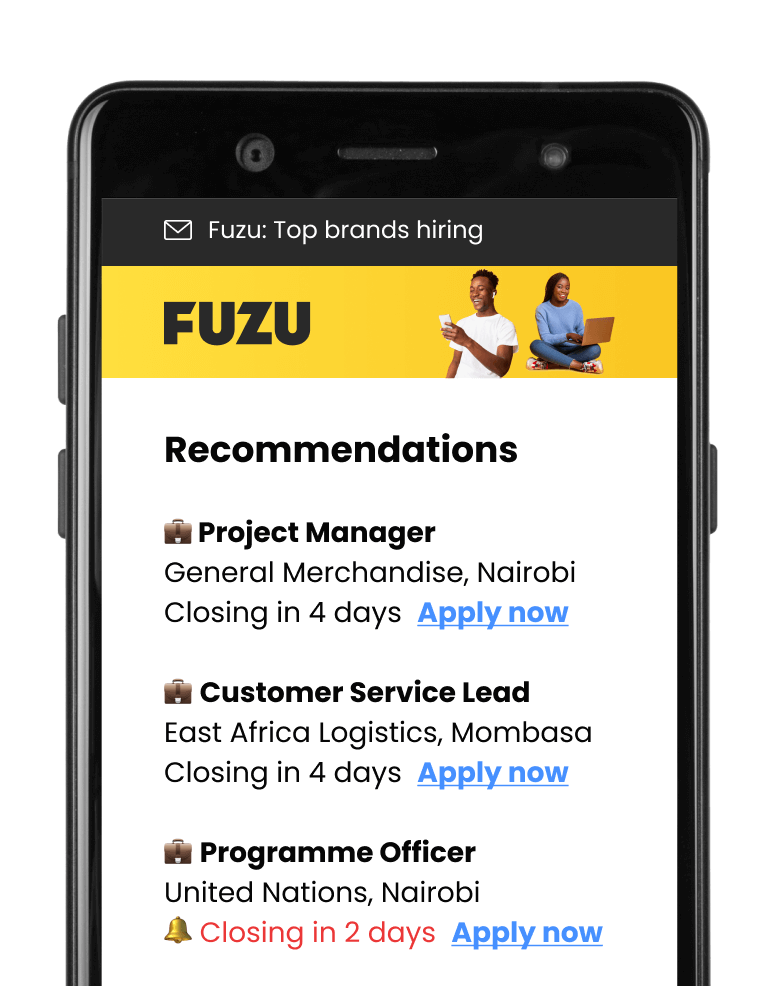

Applications submitted via Fuzu have 32% higher chance of getting shortlisted.